Shakti LLMs: Driving On-Device AI and Workplace AI Agents

In today’s rapidly evolving enterprise landscape, the demand for compact and efficient AI solutions has never been greater. The shift from centralized, cloud-reliant AI systems to on-device AI reflects the need for low-latency, privacy-centric, and energy-efficient models. At the forefront of this transformation are Shakti LLMs, which set a new benchmark for enabling intelligent agents to operate seamlessly across mobile devices, IoT systems, edge servers, and enterprise platforms.

This article delves into benchmark comparisons, throughput performance, and real-world applications, illustrating why Shakti LLMs are uniquely positioned to drive the next wave of AI innovation in on-device deployments and workplace transformation.

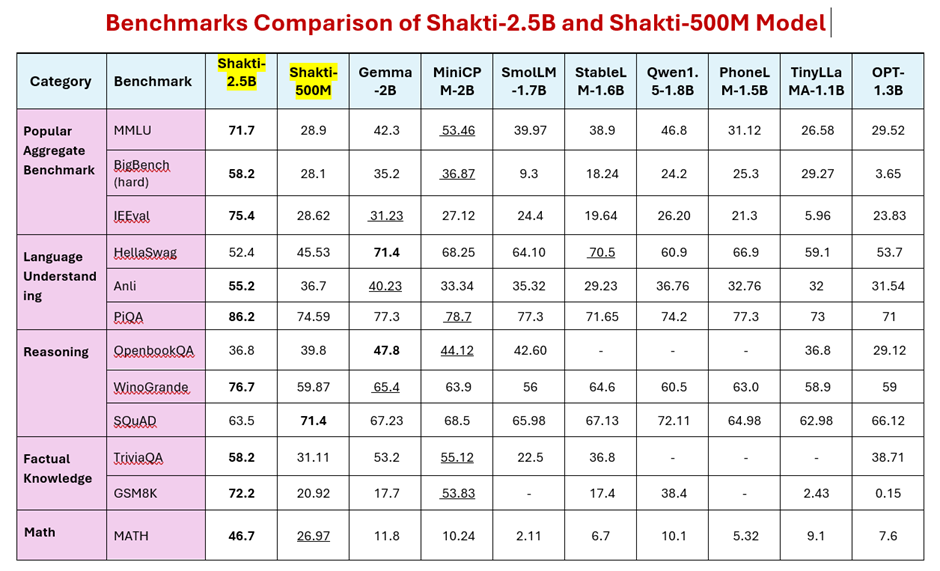

Benchmark Comparisons: Shakti vs. Competitors

The Shakti-2.5B and Shakti-500M models have been benchmarked against prominent alternatives such as MiniCP-2B, Gemma-2B, Qwen-1.5B, SmolLM2-360M, and TinyLLaMA. Across these comparisons, Shakti LLMs lead on most of the reasoning and knowledge benchmarks cited below, as well as on throughput efficiency and scalability for enterprise applications.

Here are the highlights:

Popular Aggregate Benchmarks:

- MMLU: Shakti-2.5B dominates with 71.7%, outperforming MiniCP (53.46%) and Qwen (46.8%), showcasing superior multitask learning.

- BigBench (Hard): Shakti-2.5B achieves 58.2%, significantly surpassing MiniCP (36.87%) and Gemma (35.2%).

Language Understanding:

- HellaSwag: Gemma (71.4%) and MiniCP (68.25%) lead, while Shakti-2.5B (52.4%) trails by roughly 16 to 19 percentage points, highlighting an area for improvement.

- ANLI: Shakti-2.5B stands out with 55.2%, leading competitors like Gemma (40.23%) and TinyLLaMA (32.76%).

Reasoning:

- PIQA: Shakti-2.5B delivers an exceptional 86.2%, outperforming MiniCP (78.7%) and Qwen (74.2%), solidifying its position in reasoning-heavy tasks.

- WinoGrande: Shakti-2.5B scores 76.7%, leading Qwen (60.5%) and TinyLLaMA (58.9%).

Factual Knowledge:

- TriviaQA: Shakti-2.5B’s 58.2% outpaces Qwen (55.12%) and TinyLLaMA (36.8%).

- SQuAD: Shakti-500M achieves 71.4%, surpassing larger models like TinyLLaMA (62.98%).

Math:

- MATH: Shakti-2.5B leads with 46.7%, outperforming TinyLLaMA (9.1%) and OPT (7.6%).
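To make the margins in these highlights easier to compare, the following minimal sketch computes the percentage-point gap between the Shakti score and the best competitor cited for a few of the benchmarks. The scores are copied from the bullets above; the `SCORES` table and the `gap_to_best_competitor` helper are illustrative names, not part of any Shakti tooling.

```python
# Scores (in percent) copied from the benchmark highlights above.
# Each entry maps a benchmark to (Shakti score, {competitor: score}).
SCORES = {
    "MMLU": (71.7, {"MiniCP": 53.46, "Qwen": 46.8}),
    "BigBench (Hard)": (58.2, {"MiniCP": 36.87, "Gemma": 35.2}),
    "HellaSwag": (52.4, {"MiniCP": 68.25, "Gemma": 71.4}),
    "PIQA": (86.2, {"MiniCP": 78.7, "Qwen": 74.2}),
    "WinoGrande": (76.7, {"Qwen": 60.5, "TinyLLaMA": 58.9}),
}


def gap_to_best_competitor(benchmark):
    """Return (best cited competitor, Shakti score minus its score)."""
    shakti_score, competitors = SCORES[benchmark]
    best_name = max(competitors, key=competitors.get)
    return best_name, round(shakti_score - competitors[best_name], 2)


if __name__ == "__main__":
    for name in SCORES:
        rival, gap = gap_to_best_competitor(name)
        # A positive gap means Shakti leads; a negative gap means it trails.
        print(f"{name}: {gap:+.2f} points vs. {rival}")
```

A negative gap, as on HellaSwag, marks a benchmark where the cited competitor is ahead.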

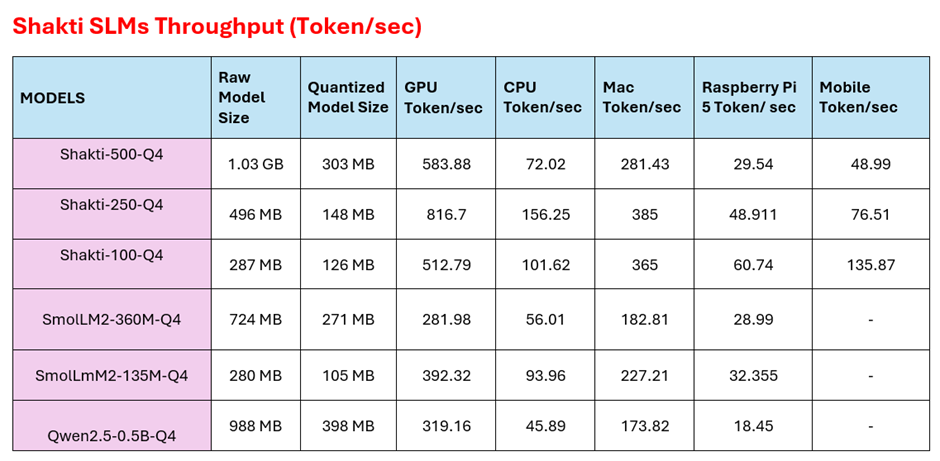

Throughput Comparison: Efficiency Redefined

Throughput, a critical metric for on-device and edge deployments, highlights Shakti’s efficiency compared to competitors:

GPU Token Throughput:

- Shakti-500M: 583.88 tokens/sec, about 2.1× the throughput of SmolLM2-360M (281.98 tokens/sec) and about 1.8× that of Qwen (319.16 tokens/sec).

- Shakti-250M: 816.7 tokens/sec, the highest GPU throughput cited here and a strong reference point for compact models.

CPU Token Throughput:

- Shakti models maintain strong performance on CPU as well: Shakti-500M reaches 72.02 tokens/sec, higher than SmolLM2-360M (56.01 tokens/sec) and Qwen (45.89 tokens/sec).

Mobile and Edge Devices:

- Shakti-100-Q4 leads with 135.87 tokens/sec, outperforming alternatives, making it ideal for IoT and mobile deployments.

- Raspberry Pi 5: Shakti-500M achieves 29.54 tokens/sec, outperforming other compact models like Qwen (18.45 tokens/sec).
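The throughput figures above translate directly into speedup ratios and rough generation times. The sketch below works through that arithmetic using only the numbers quoted in this section; the helper names `speedup` and `seconds_for` are illustrative, and the time estimate assumes a steady decode rate with no prompt-processing overhead.

```python
# Tokens/sec figures copied from the throughput comparison above.
THROUGHPUT = {
    "GPU": {"Shakti-500M": 583.88, "SmolLM2-360M": 281.98, "Qwen": 319.16},
    "CPU": {"Shakti-500M": 72.02, "SmolLM2-360M": 56.01, "Qwen": 45.89},
    "Raspberry Pi 5": {"Shakti-500M": 29.54, "Qwen": 18.45},
}


def speedup(platform, baseline, model="Shakti-500M"):
    """Ratio of the model's tokens/sec to the baseline's on one platform."""
    rates = THROUGHPUT[platform]
    return round(rates[model] / rates[baseline], 2)


def seconds_for(num_tokens, tokens_per_sec):
    """Rough time to generate num_tokens at a constant decode rate."""
    return round(num_tokens / tokens_per_sec, 1)


if __name__ == "__main__":
    for platform, rates in THROUGHPUT.items():
        for baseline in rates:
            if baseline != "Shakti-500M":
                ratio = speedup(platform, baseline)
                print(f"{platform}: {ratio}x vs. {baseline}")
    # Example: a 256-token reply on the Raspberry Pi 5 figure.
    print(seconds_for(256, THROUGHPUT["Raspberry Pi 5"]["Shakti-500M"]), "s")
```

On these figures the GPU advantage over SmolLM2-360M is close to 2×, while the CPU and Raspberry Pi 5 advantages fall between roughly 1.3× and 1.6×.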

The Growing Need for On-Device AI Agents

On-device AI agents represent the future of enterprise productivity. Unlike traditional cloud-based AI systems, they leverage local hardware resources to perform inference tasks, making them a superior choice for the following reasons:

- Data Privacy and Security: Processing data locally keeps sensitive information off the network, reducing exposure risk and addressing concerns in industries like finance, healthcare, and government.

- Real-Time Decision Making: With inference performed directly on the device, on-device AI removes the network round trip to a cloud service, cutting response latency for applications like autonomous systems, fraud detection, and customer service chatbots.

- Cost-Efficiency: By minimizing dependency on cloud resources, enterprises can significantly reduce operational expenses, particularly for tasks that require frequent AI inference.

- Scalability Across Devices: On-device AI agents can be deployed across a diverse range of hardware, from wearables and smart appliances to enterprise-grade edge servers, ensuring wide applicability.

Strategic Comparison with Competitors

Strengths of Shakti LLMs:

- Scalability: Shakti-2.5B dominates benchmarks across reasoning and factual knowledge tasks.

- Efficiency: The compact Shakti-500M and Shakti-100-Q4 models deliver unparalleled throughput on GPUs, CPUs, and mobile devices.

- Enterprise-Ready: Fine-tuned for industries like healthcare, finance, and retail, offering out-of-the-box support for real-world applications.

Where Shakti Leads:

- Reasoning: The highest scores among the compared models in PIQA (86.2%) and WinoGrande (76.7%).

- Energy Efficiency: Shakti’s quantized models ensure low power consumption without compromising accuracy.

What Makes Shakti Stand Out:

- Quantization and Memory Efficiency: Shakti models are optimized for minimal memory usage while retaining high accuracy.

- Hardware Independence: Shakti’s hardware-agnostic design allows integration into diverse infrastructures, from legacy servers to advanced edge platforms.
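As a rough illustration of why quantization matters for memory, the sketch below estimates weight storage from parameter count and bits per weight. The parameter counts are read off the model names (2.5B and 500M); the 16-bit and 4-bit widths are assumptions chosen for illustration, and the estimate ignores activations, the KV cache, and the small overhead of quantization scales.

```python
def weight_memory_gb(num_params, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    # Parameter counts taken from the model names; bit widths are assumed.
    models = {"Shakti-2.5B": 2.5e9, "Shakti-500M": 500e6}
    for name, params in models.items():
        for bits in (16, 4):
            gb = weight_memory_gb(params, bits)
            print(f"{name} at {bits}-bit: ~{gb:.2f} GB of weights")
```

Under these assumptions, moving from 16-bit to 4-bit weights cuts weight storage by a factor of four, which is what brings a multi-billion-parameter model within reach of memory-constrained devices.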

Enabling On-Device AI Agents with Shakti LLMs

The compact and efficient design of Shakti LLMs positions them as ideal for on-device AI agents. Key applications include:

- IoT and Edge AI: Powering smart home devices, predictive maintenance systems, and autonomous navigation with real-time insights.

For example, Shakti-100-Q4 supports smart home ecosystems by delivering seamless voice recognition and energy management insights, enabling intuitive and efficient user experiences.

- Healthcare: Shakti-2.5B supports clinical decision support systems, while Shakti-500M powers wearable health trackers with low-latency inference.

For example, Shakti-2.5B can be deployed in hospital systems to analyze patient records in real time, enabling faster diagnostic decisions and improving patient outcomes.

- Workplace AI Agents: Embedded agents handle tasks like email prioritization, workflow automation, and data visualization, enhancing productivity.

For instance, Shakti-powered agents can prioritize and categorize high-importance emails in corporate environments, enabling faster responses to critical issues. Workflow automation powered by Shakti-500M can streamline HR processes like candidate shortlisting and employee onboarding, saving hours of manual effort. Additionally, Shakti-2.5B can generate real-time visual insights from sales or operational data in tools like PowerBI, helping teams make data-driven decisions during meetings.

- Retail and Customer Service: Real-time chatbots powered by Shakti deliver personalized customer experiences with minimal resource requirements.

For example, retailers can integrate Shakti-500M into kiosks for personalized shopping experiences and instant query resolution, enhancing both efficiency and customer satisfaction.

The Role of Shakti in Workplace Transformation

On-device AI agents powered by Shakti LLMs have the potential to revolutionize how enterprises operate. Key applications include:

Intelligent Workplace Agents:

- Task Automation: Shakti-powered agents can automate repetitive tasks such as scheduling meetings, generating reports, and managing emails, enabling employees to focus on strategic activities.

- Real-Time Insights: Embedded in tools like PowerBI or Slack, Shakti agents can provide actionable insights during meetings, enhancing decision-making processes.

- Enhanced Collaboration: AI agents can facilitate cross-department collaboration by integrating with platforms like Microsoft Teams, generating instant summaries and to-do lists.

Industry-Specific Use Cases:

- Healthcare: Power clinical support systems, automate patient documentation, and enable real-time diagnostic tools.

- Retail and Customer Service: Deploy chatbots that handle inquiries, personalize customer recommendations, and provide 24/7 assistance.

- Manufacturing: Enable predictive maintenance by processing IoT sensor data in real time, helping to minimize downtime.

Unlocking the Strategic Value of Shakti LLMs for Enterprises

- Future-Proofing with Scalable AI: Shakti models are hardware-agnostic, enabling seamless deployment across cloud, on-premises, and edge setups. This ensures long-term compatibility with evolving enterprise needs, providing flexibility to scale AI infrastructure as business requirements grow.

- Cost and Energy Efficiency: The quantized architecture of Shakti models minimizes memory and computational requirements, leading to significant reductions in infrastructure costs. This makes AI adoption feasible for organizations of all sizes, while also optimizing power consumption for sustainable operations.

- Driving Competitive Advantage: By deploying Shakti-powered on-device AI agents, enterprises can offer faster, reliable, and intelligent AI-driven services, elevating customer experiences and streamlining workforce productivity. Shakti’s superior performance in reasoning and throughput gives businesses a competitive edge in delivering innovative solutions.

- Fostering Innovation: Shakti’s high throughput and compact architecture empower enterprises to build custom AI workflows tailored to specific industry needs. Whether it’s automating workflows, enhancing analytics, or enabling new use cases, Shakti provides a robust foundation for innovation.

Adoption Strategies for Enterprises

- Leverage Shakti’s Scalability: Strategically deploy Shakti models based on workload requirements:

Shakti-2.5B: Ideal for large-scale, complex tasks such as enterprise-wide analytics, multi-turn conversational AI, and knowledge-driven workflows.

Shakti-500M: Perfect for localized, lightweight AI use cases, including IoT devices, real-time decision-making, and edge deployments.

- Optimize Edge Infrastructure: Pair Shakti models with edge computing environments to ensure low-latency inference and improve performance in real-time applications such as predictive maintenance, autonomous operations, and real-time customer engagement.

- Prioritize ESG Goals: With their energy-efficient design, Shakti models help enterprises meet Environmental, Social, and Governance (ESG) goals. This enables organizations to adopt green AI initiatives by reducing the environmental impact of high-scale AI deployments while optimizing resource usage.

- Build AI-First Workplaces: Create intelligent workplace agents powered by Shakti models to automate repetitive and low-value tasks, freeing employees for strategic initiatives; enhance team collaboration through tools like real-time meeting analytics, automated to-do lists, and context-aware insights; and improve decision-making processes with AI-driven recommendations and actionable insights.

- Key Metrics: Track key performance indicators like latency reduction, energy savings, and accuracy improvements to measure the impact of Shakti deployments on operational efficiency.

- Pilot Programs: Begin with small-scale deployments in specific departments or business units to validate Shakti’s performance. Use pilot results to gather feedback and refine workflows before scaling organization-wide, ensuring a smoother adoption process.

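The workload-based guidance in this section (Shakti-2.5B for large, knowledge-driven tasks; Shakti-500M for lightweight edge use cases) can be captured as a simple routing rule. The following is a minimal sketch under stated assumptions: the `Workload` fields and the `choose_model` rule are hypothetical simplifications for illustration, not an official selection policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of a deployment target (illustrative only)."""
    name: str
    runs_on_edge: bool      # constrained device such as an IoT node or kiosk
    needs_multi_turn: bool  # multi-turn conversation or knowledge-heavy work


def choose_model(workload):
    """Pick a Shakti model name following the guidance in this section.

    Assumed rule: edge-constrained workloads always get the smaller model;
    otherwise, complex conversational or analytic work gets the larger one.
    """
    if workload.runs_on_edge:
        return "Shakti-500M"
    if workload.needs_multi_turn:
        return "Shakti-2.5B"
    return "Shakti-500M"


if __name__ == "__main__":
    workloads = [
        Workload("enterprise analytics assistant", False, True),
        Workload("IoT sensor monitor", True, False),
        Workload("retail kiosk", True, True),
    ]
    for w in workloads:
        print(f"{w.name}: {choose_model(w)}")
```

A real deployment would likely weigh more factors, such as available memory and latency targets, and a pilot program is a natural place to validate whichever rule is chosen.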

Unlocking the Potential of On-Device AI Agents

The adoption of Shakti LLMs as the backbone for on-device AI agents is more than an operational shift—it’s a strategic imperative. By integrating these models into their ecosystems, enterprises can achieve:

- Autonomy: AI agents capable of operating without cloud reliance.

- Agility: Real-time processing for faster response times.

- Sustainability: Energy-efficient models that align with corporate ESG goals.

- Productivity: Enhanced employee output through intelligent automation.

A Vision for the Future: Shakti LLMs and Workplace AI

As the workplace evolves into a hub of intelligent systems, Shakti LLMs are at the center of this transformation. Their ability to empower on-device AI agents, paired with industry-leading benchmarks and scalability, ensures that enterprises can harness AI to its fullest potential.

Shakti LLMs combine benchmark-leading performance, hardware-agnostic flexibility, and unmatched throughput efficiency, making them a natural choice for on-device AI agents and enterprise applications. By adopting Shakti, enterprises can unlock the full potential of intelligent, sustainable, and scalable AI solutions, redefining how they operate in an increasingly AI-driven world.

Shakti represents the future of AI—delivering performance, efficiency, and real-world applicability in equal measure.